Contact Responsibility as a Solution to AV Liability

By Matthew Wansley*

Human drivers are a menace to public health. In 2019, 36,096 Americans were killed in motor vehicle crashes, and an estimated 2.74 million Americans were injured. Most crashes aren’t “accidents.” The National Highway Traffic Safety Administration estimates that driver error is the critical reason for 94% of crashes. The deployment of autonomous vehicles (AVs)—likely in the form of robotaxis—will make transportation safer. AVs will cause fewer crashes than conventional vehicles (CVs) because software won’t make the kinds of errors that drivers make. AVs won’t drive drunk, get drowsy, or be distracted. They won’t speed, run red lights, or follow other vehicles too closely. They will drive cautiously and patiently. AVs will consistently drive like the safest human drivers drive at their best.

But AVs could be even safer, as I argue in The End of Accidents (forthcoming in the U.C. Davis Law Review). AVs could be designed not only to avoid making their own errors but also to reduce the consequences of errors by human drivers, cyclists, and pedestrians. AVs can monitor their surroundings better and react more quickly than human drivers. AV technology has the potential to make better predictions and better decisions than humans can. AVs could be designed to anticipate when other road users will drive, bike, or walk unsafely, and either to prevent those errors from leading to crashes or to make unavoidable crashes less severe. As long as AVs share the roads with humans, improving AV technology’s capability to mitigate the consequences of human error will save lives.

Liability rules will influence how much AV companies invest in developing safer technology. Existing products liability law creates insufficient incentives for safety because AV companies can reduce their liability for a crash by showing that the plaintiff was comparatively negligent. A comparative negligence defense will be a powerful liability shield because the kinds of errors that human drivers make—violating traffic laws and driving impaired—are the kinds of errors that human jurors recognize as negligence. A liability regime with a comparative negligence defense only creates incentives for AV companies to develop behaviors that AV technology has already mastered: driving at the speed limit, observing traffic signals, and maintaining a safe following distance. It won’t push AV companies to develop software that can reliably anticipate human error and take evasive action.

Data from real-world AV testing shows that AVs are rarely causing crashes but are failing to avoid plausibly preventable crashes. In October 2020, the leading AV company, Waymo, released a report of every contact between its prototype robotaxis and other vehicles, bicycles, or pedestrians during its 6.1 million miles of autonomous driving in 2019. At the current stage of testing, Waymo’s AVs usually have a backup driver behind the wheel, ready to take over manual control if necessary. Waymo’s report includes every actual contact during autonomous operation and every contact that would have happened, according to Waymo’s simulation software, if the backup driver hadn’t taken over manual control. If the report is reliable, almost every contact in the 6.1 million miles involved human error. In fact, in most cases, it’s not even arguable that the AV made an error that contributed to the contact.

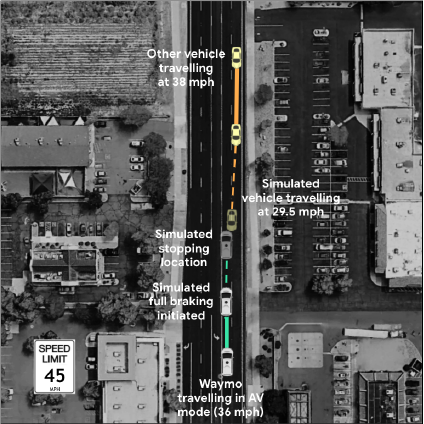

Waymo’s report also reveals, however, that its AVs sometimes fail to avoid plausibly preventable crashes caused by human error. Consider the scenario depicted below. Late one night in 2019, a Waymo AV was traveling in the left lane of a divided road in the suburbs of Phoenix. A CV traveling the wrong way in the right lane was veering toward the AV. The backup driver took over manual control. According to Waymo’s simulation, if the backup driver hadn’t taken over, the CV would have crashed head-on into the AV. The force of the collision would have caused the AV’s airbag to deploy. The AV would have braked but would not have swerved out of the way. The CV’s driver likely wouldn’t have swerved out of the way either, because the driver was likely “significantly impaired or fatigued.”

Waymo doesn’t say whether its backup driver avoided a crash, but it’s quite possible that the driver did. Evolution has armed humans with a powerful survival instinct, and the backup driver should have had room for evasive maneuvers on a wide suburban road late at night. Yet Waymo’s AV software, which drove 6.1 million miles that same year without causing a crash, wouldn’t have prevented an apparently preventable head-on collision.

Consider the simulated crash from a liability perspective. Suppose there had been no backup driver, and the vehicles collided. Assume, consistent with the report, that the driver of the CV was drunk. Would the drunk driver prevail against Waymo in a lawsuit? Almost certainly not. The question itself sounds absurd. Drunk driving that results in a crash is negligence per se. Waymo’s comparative negligence defense would dispose of the case. Because Waymo would avoid liability for the crash, it would have little incentive to develop technology that could prevent a similar crash in the future.

Now consider the same simulated crash from a social welfare perspective. Would the social benefits of technology that could prevent a crash like this exceed the cost of development? Likely yes. Drunk driving is common. Drivers, impaired or not, sometimes drive in the wrong direction. AV technology’s ability to monitor the environment more consistently and react more quickly gives AVs advantages over CVs in responding to impaired drivers. If AV companies invest in developing better behavior prediction and decision-making capabilities, they could design AVs that would dramatically reduce the social costs of drunk driving. AVs could become superhuman defensive drivers, preventing not only crashes like this one but also crashes that now seem unpreventable.

Investments in developing safer AV software will be highly cost-effective because the software will be deployed at scale. When an AV company develops code that enables its AVs to prevent a crash in a certain kind of traffic scenario—and doesn’t make them less safe in other scenarios—it will add the new code to the software that runs on all the AVs in its fleet. The improved code will prevent a crash every time one of the company’s AVs encounters a similar traffic scenario for the rest of history. As engineers change jobs or share ideas, the fix will spill over to other AV companies’ fleets. From a social welfare perspective, the return on investments in developing safer AV technology will be tremendous.

AV companies will develop AV technology’s full crash prevention potential only if they internalize the costs of all preventable crashes. But determining which crashes could be efficiently prevented with yet-to-be-developed AV technology would be exceedingly difficult for jurors, judges, or regulators. AV technology may achieve safety gains not just by mimicking the behavior of an expert human driver but by exhibiting emergent behavior: behavior that would seem alien to a human observer. The better approach is to treat all crashes involving AVs as potentially preventable. In The End of Accidents, I defend a system of absolute liability for AV companies that I call “contact responsibility.” Under contact responsibility, AV companies would pay for the costs of all crashes in which their AVs come into contact with other vehicles, persons, or property unless they could show that the party seeking payment intentionally caused the crash. No crash involving an AV would be considered an accident.[1]

Contact responsibility would align the private financial incentives of AV companies more closely with public safety. AV companies will collect massive amounts of data on driver, cyclist, and pedestrian behavior as their fleets of AVs passively record their surroundings. Contact responsibility will push AV companies to sift through that data to find opportunities to prevent crashes efficiently. In many cases, the solution will be developing safer technology. If a company’s AVs are frequently being hit in intersections by CVs that run red lights, the company might develop software that can more reliably predict when CVs won’t stop at traffic signals. In other cases, the solution may be deploying AVs differently. The company might plan routes for its robotaxis that avoid especially dangerous roads at certain times of day. In still other cases, the solution may be political. The company might use its money to lobby for protected bike lanes, mandatory ignition interlocks, or the development of a vehicle-to-vehicle communication network.

Contact responsibility might sound radical because it would insulate human drivers from tort liability for crashes they cause negligently or even recklessly. One might worry that this would create moral hazard. But liability plays at most a modest role in deterring unsafe driving. Human drivers tend to cause crashes by breaking traffic laws and driving impaired. Under contact responsibility, the civil and criminal penalties for those violations would continue to provide deterrence. Drivers would also still face liability for crashes with other CVs, cyclists, and pedestrians. They would still face the possibility that their insurers would raise their premiums after a crash with an AV, even though they weren’t held liable, because the crash would indicate a higher risk of crashing with a CV. Most importantly, drivers would still want to avoid the risk of injuring themselves or others. Contact responsibility wouldn’t diminish those deterrents. It would simply target liability incentives where they will be most useful: AV companies’ investment decisions.

In recent years, several scholars have proposed reforms to adapt tort law to crashes involving AVs.[2] The debate has yielded valuable insights, but it has been conducted almost entirely from the armchair. Now that data on AV safety performance is publicly available, it’s possible to make more informed predictions about the real-world consequences of different liability rules. The data suggest that AV crashes will follow a predictable pattern. AVs will rarely cause crashes. But they will fail to avoid plausibly preventable crashes caused by other road users. Therefore, it’s critical for liability reform to address whether AV companies will be responsible when a negligent or reckless human driver causes a crash with an AV. Scholars who have considered the issue of comparative negligence have advocated retaining some form of the defense.[3] In fact, the leading reform proposal expressly rejects AV company responsibility for “injury caused by the egregious negligence of a CV driver, coupled with minimal causal involvement by the [AV].”[4] I argue that absolving AV companies from responsibility for those injuries would be a mistake. Contact responsibility is the only liability regime that will unlock AV technology’s full crash prevention potential.

[1] For crashes between AVs, I endorse Steven Shavell’s “strict liability to the state” proposal. See Steven Shavell, On the Redesign of Accident Liability for the World of Autonomous Vehicles 2 (Harvard Law Sch. John M. Olin Ctr., Discussion Paper No. 1014, 2019), http://www.law.harvard.edu/programs/olin_center/papers/pdf/Shavell_1014.pdf.

[2] See generally Kenneth S. Abraham & Robert L. Rabin, Automated Vehicles and Manufacturer Responsibility for Accidents: A New Legal Regime for a New Era, 105 Va. L. Rev. 127 (2019); Mark A. Geistfeld, A Roadmap for Autonomous Vehicles: State Tort Liability, Automobile Insurance, and Federal Safety Regulation, 105 Calif. L. Rev. 1611 (2017); Kyle D. Logue, The Deterrence Case for Comprehensive Automaker Enterprise Liability, 2019 J. L. & Mobility 1; Bryant Walker Smith, Automated Driving and Product Liability, 2017 Mich. St. L. Rev. 1; David C. Vladeck, Machines Without Principals: Liability Rules and Artificial Intelligence, 89 Wash. L. Rev. 117 (2014).

[3] See, e.g., Mark A. Lemley & Bryan Casey, Remedies for Robots, 86 U. Chi. L. Rev. 1311, 1383 (2019).

[4] Abraham & Rabin, supra note 2, at 167.

* Matthew Wansley researches venture capital law and risk regulation as an Assistant Professor of Law at the Benjamin N. Cardozo School of Law. Prior to joining the Cardozo faculty, he was the General Counsel of nuTonomy Inc., an autonomous vehicle startup, and a Climenko Fellow and Lecturer on Law at Harvard Law School. He clerked for the Hon. Scott Matheson on the U.S. Court of Appeals for the Tenth Circuit and the Hon. Edgardo Ramos on the U.S. District Court for the Southern District of New York.